A project by Patrick Kruse & Paul Kretschel

University of Applied Sciences Düsseldorf

with Prof. Anja Vormann

At the beginning of the 20th century, there were about 2800 synagogues and prayer rooms in the German Empire. During the November pogroms of 1938, a large number of these places of worship were set on fire, along with many Jewish businesses and institutions.

On the night of 9th November 1938 and in the following days, around 1400 of these institutions were destroyed by the Nazis. Since then, the 9th of November has stood as a night of terror. It is regarded as the transition from the social exclusion of people of Jewish faith to their systematic persecution under the National Socialist dictatorship. In the course of the Second World War, anti-Semitism culminated in the Holocaust, the industrialised genocide of some 6 million European Jews.

The AR Synagogue project aims to create a hybrid of a physical and a digital place of remembrance. At the former sites of Berlin synagogues, a digital portal to history is to open up and allow a glimpse into the synagogues and Jewish places of worship that existed before 1938. The portal is represented by the entrance door of the respective building, and users can look through the open door into the past.

Apart from the door, only the interior can be seen. As a sign of the great loss, the outer façade is not visible.

It is meant to symbolise that the contents and the ideas of this culture have indeed suffered great damage, but that the Nazis were not able to wipe out the core of the culture. What they managed to do was damage the shell, but they were never able to completely extinguish Jewish life and culture, despite the greatest crimes.

Technical Implementation

The AR experience is going to run on a mobile device such as a tablet or smartphone. If the project is presented as an exhibition, it might be helpful to provide such a device for visitors at the location.

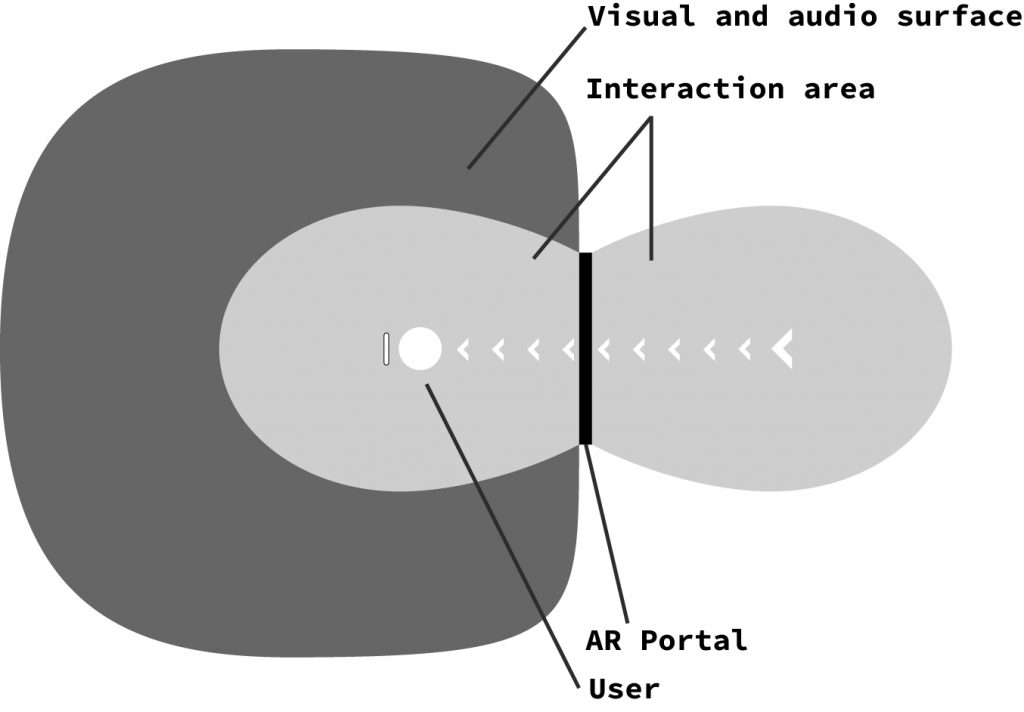

The app will display a virtual portal overlaid on the device’s camera output. The portal’s position will be determined by the location of a physical marker placed on the floor in the center of the presentation area. The marker is going to be a uniquely identifiable image made of a robust material, so that it can withstand people walking over it.
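To illustrate how the portal could be anchored to the marker, here is a minimal Python sketch. It assumes the tracking framework reports the marker's world position and its rotation around the vertical axis. The function name `portal_position_from_marker`, the Y-up coordinate convention and the optional `local_offset` are our own assumptions for illustration and are not part of any specific AR framework.

```python
import math


def portal_position_from_marker(marker_pos, marker_yaw_deg, local_offset=(0.0, 0.0, 0.0)):
    """Place the portal relative to the detected floor marker.

    marker_pos:      (x, y, z) world position of the marker, Y pointing up (assumed).
    marker_yaw_deg:  rotation of the marker around the vertical axis, in degrees.
    local_offset:    hypothetical offset of the portal in the marker's own frame.
    """
    yaw = math.radians(marker_yaw_deg)
    ox, oy, oz = local_offset

    # Rotate the local offset around the vertical (Y) axis into world space.
    world_dx = ox * math.cos(yaw) + oz * math.sin(yaw)
    world_dz = -ox * math.sin(yaw) + oz * math.cos(yaw)

    mx, my, mz = marker_pos
    return (mx + world_dx, my + oy, mz + world_dz)


# With the default offset, the portal sits exactly on the marker.
print(portal_position_from_marker((1.0, 0.0, 2.0), 0.0))
```

Keeping the offset in the marker's local frame means the portal turns together with the marker if the marker is laid down in a different orientation.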

The viewer is encouraged to wear headphones because the AR experience will support spatial audio, most of which originates at the portal’s position. The sounds will be composed of authentic audio recordings from real synagogues. Their spatial nature will draw the viewer’s attention towards the portal. The closer the viewer gets to the portal, the louder the sounds become, until the viewer eventually goes through the portal. At that point the sounds will completely surround the viewer, deepening their immersion in the experience.
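The audio behaviour described above could be sketched roughly as follows. This is only an illustration under our own assumptions: a simple inverse-distance falloff, a hypothetical `spatial_blend` value (1.0 means the sound comes from the portal, 0.0 means it surrounds the viewer), and made-up default distances. The real app may use a different attenuation curve.

```python
def portal_audio_mix(distance_m, has_crossed, ref_distance_m=1.0, max_distance_m=20.0):
    """Return (gain, spatial_blend) for the synagogue sound.

    distance_m:   distance between the viewer and the portal in metres.
    has_crossed:  True once the viewer has stepped through the portal.
    """
    if has_crossed:
        # Inside the synagogue: full volume, sound surrounds the viewer.
        return 1.0, 0.0

    # Clamp the distance so the gain never exceeds 1.0 and never vanishes.
    d = min(max(distance_m, ref_distance_m), max_distance_m)

    # Inverse-distance falloff: the closer the viewer, the louder the sound.
    gain = ref_distance_m / d
    return gain, 1.0


for d in (8.0, 4.0, 2.0, 1.0):
    print(d, portal_audio_mix(d, has_crossed=False))
```

Returning the blend together with the gain keeps the switch from a positioned source to surrounding sound in one place, so it can be faded over a short time rather than toggled abruptly.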

After going through the portal, the viewer can move freely through the virtual synagogue. On the device’s screen, the real world will now be completely hidden behind the virtual content until the viewer decides to go back through the portal. In the virtual world it is important to communicate the environment’s spatial boundaries to the viewer, because accidentally colliding with the virtual content would destroy the immersion. The viewer should also not wander too far from the world’s center, because there could be physical boundaries as well (e.g. busy roads).
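A simple way to reason about the outer limit is a radius check around the world's center. The sketch below is a hypothetical helper, not the app's actual logic: the radii, the state names and the function `boundary_state` are assumptions chosen for illustration.

```python
import math


def boundary_state(viewer_xz, center_xz=(0.0, 0.0), warn_radius_m=4.0, max_radius_m=5.0):
    """Classify the viewer's position on the floor plane.

    Returns "inside", "warning" (close to the limit) or "outside".
    """
    dx = viewer_xz[0] - center_xz[0]
    dz = viewer_xz[1] - center_xz[1]
    dist = math.hypot(dx, dz)  # horizontal distance from the world's center

    if dist >= max_radius_m:
        return "outside"
    if dist >= warn_radius_m:
        return "warning"
    return "inside"


print(boundary_state((1.0, 1.0)))
print(boundary_state((3.0, 3.0)))
```

The intermediate "warning" state gives the app room to hint at the limit before the viewer actually leaves the safe area.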

In order to provide a visual boundary that does not affect the immersion too much, we will display the virtual synagogue as a point cloud. This visual representation of a 3D environment will automatically dissolve into small distinct points as soon as the viewer gets too close (as shown in the video above).
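The dissolving effect can be estimated with a little arithmetic. The sketch below assumes that every point is drawn at a constant size in screen pixels and that neighbouring points are evenly spaced on a surface. Both are our assumptions for illustration, as are the function name `screen_coverage` and the example numbers.

```python
def screen_coverage(distance_m, point_spacing_m, point_size_px, focal_length_px):
    """Estimate how densely a point-cloud surface fills the screen.

    Returns a value between 0 and 1: 1.0 means neighbouring points touch or
    overlap (the surface looks solid), lower values mean visible gaps.
    """
    # Pinhole projection: on-screen distance between two neighbouring points.
    gap_px = focal_length_px * point_spacing_m / distance_m

    # Fraction of that gap that a single point covers, capped at 1.0.
    return min(1.0, point_size_px / gap_px)


# Points 1 cm apart, drawn 4 px wide, camera focal length 1000 px (example values).
for d in (5.0, 2.5, 1.0, 0.5):
    print(d, screen_coverage(d, 0.01, 4.0, 1000.0))
```

With these example values the surface looks closed from 2.5 m or further away and breaks up into separate points as the viewer comes closer, which is the kind of soft boundary described above.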

Development Journal

01.05.2021

We have created a simple prototype app to test the feasibility of our concept. When the app is started, the user sees the video output of the device’s camera, just as in the default camera app on any smartphone. Layered on top of that, the app shows a square window exposing a virtual room (currently consisting of randomly placed cubes). Looking through the window, the user sees the 3D content of the room rendered correctly with regard to the device’s position and orientation. When the user moves through the window, they are teleported into the virtual room.
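One possible way to detect the moment the user moves through the window is to test whether the camera's path between two frames crosses the window's plane inside the square. The sketch below is not the prototype's code. It assumes camera positions are already expressed in the window's local coordinates, with the window centered at the origin in the plane z = 0, and the function name `crossed_window` is hypothetical.

```python
def crossed_window(prev_pos, curr_pos, half_size=0.5):
    """Return True if the camera moved through the square window this frame.

    prev_pos, curr_pos: (x, y, z) camera positions in the window's local frame.
    half_size:          half the side length of the square window in metres.
    """
    pz, cz = prev_pos[2], curr_pos[2]

    # No crossing if both positions are on the same side of the window plane.
    if (pz > 0) == (cz > 0):
        return False

    # Point where the movement segment intersects the plane z = 0.
    t = pz / (pz - cz)
    x = prev_pos[0] + t * (curr_pos[0] - prev_pos[0])
    y = prev_pos[1] + t * (curr_pos[1] - prev_pos[1])

    # Only count the crossing if it happened inside the square opening.
    return abs(x) <= half_size and abs(y) <= half_size


print(crossed_window((0.0, 0.0, 1.0), (0.0, 0.0, -1.0)))  # straight through
print(crossed_window((2.0, 0.0, 1.0), (2.0, 0.0, -1.0)))  # passes beside the window
```

Because the test only looks at the sign change, it works in both directions, so the same check can be used when the user walks back out of the virtual room.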

Unfortunately, whenever the teleportation currently takes place, the environment behind the window flickers into view for a few frames. This breaks the user’s immersion in the virtual world, so we need to find a fix for this issue.